This project was part of my two-month internship at the Laboratoire des Sciences du Numérique de Nantes (LS2N) at Centrale Nantes. It was my first encounter with the Robot Operating System (ROS). I had the opportunity to experiment with robotics on the TurtleBot and with LiDAR and Kinect technology. My internship centered on cartography and Simultaneous Localization and Mapping (SLAM).

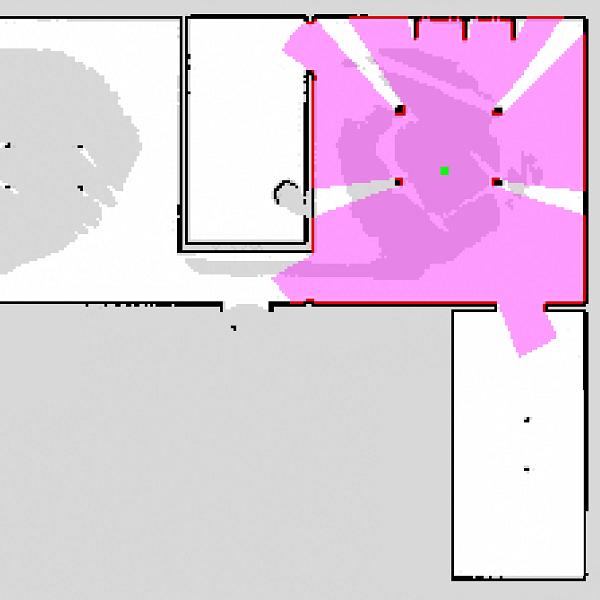

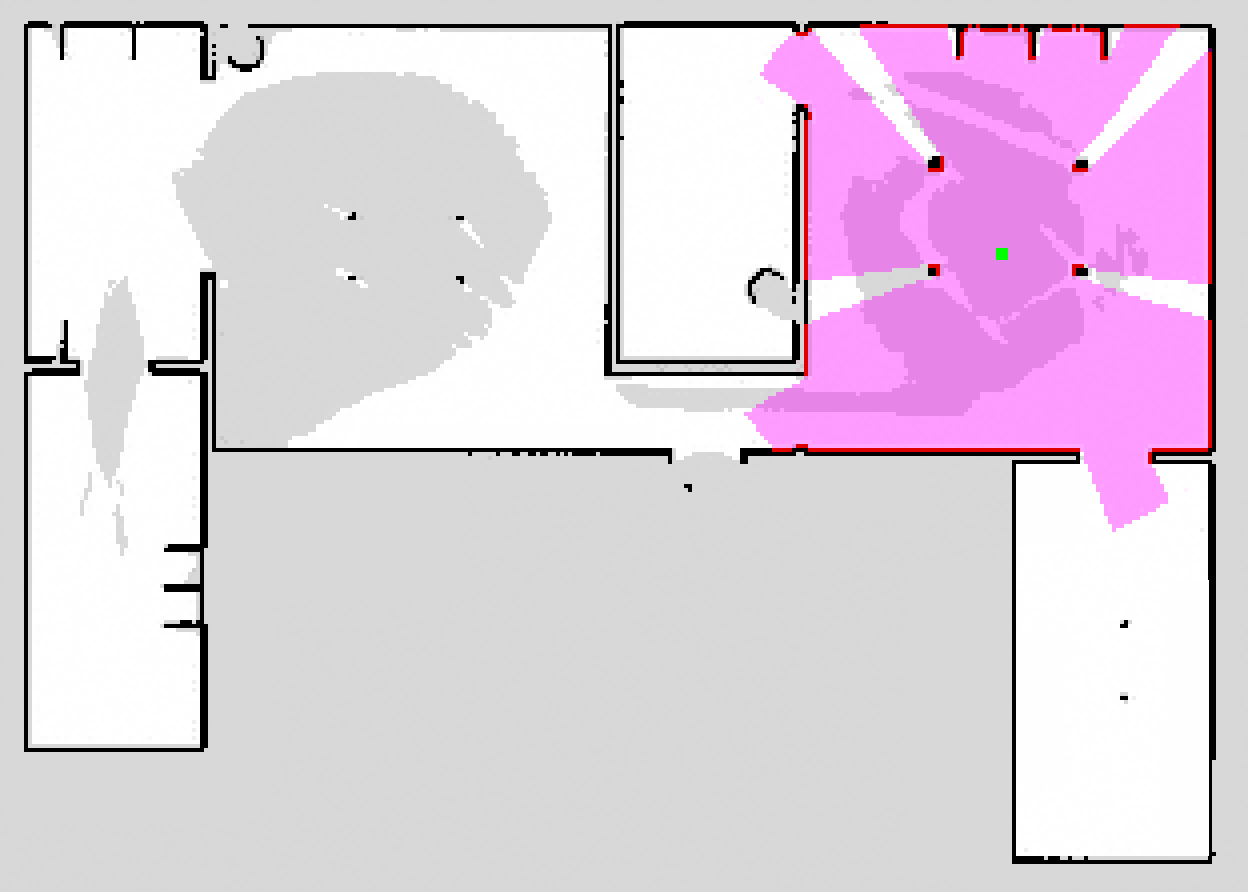

My first task was to develop a simulation of a TurtleBot and its LiDAR in a previously generated map. The map is simply a rasterized image of the walls of a room. I wrote a ROS package that takes linear and angular velocity commands and displays the robot moving along with the LiDAR data. The package is made of three nodes. The first reconstructs the odometry (positioning) of the robot by integrating the commands. The second uses the odometry and OpenCV with the provided map to simulate the LiDAR sensor data through ray tracing, and draws this data as a pink circle and red borders on the image matrix. The third displays this image in a separate window. The middle node was written in C++ for performance reasons, while the odometry and image window nodes are simple Python scripts.
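To give an idea of what the LiDAR node does, here is a minimal sketch in Python. It is not the original C++/OpenCV code: it assumes the map is a boolean NumPy array where `True` marks a wall pixel, and it marches along each beam in fixed steps until it hits a wall. The function name `simulate_scan` and its parameters (`n_beams`, `max_range`, `step`) are hypothetical names chosen for this illustration.

```python
import math
import numpy as np

def simulate_scan(grid, pose, n_beams=360, max_range=100.0, step=0.5):
    """Return one range per beam (in pixels) by marching along each ray.

    grid: 2D boolean array, True where there is a wall (assumed map format).
    pose: (x, y, theta) in pixel coordinates; x indexes columns, y indexes rows.
    """
    x0, y0, theta = pose
    height, width = grid.shape
    ranges = []
    for i in range(n_beams):
        angle = theta + 2.0 * math.pi * i / n_beams
        dx, dy = math.cos(angle), math.sin(angle)
        r = 0.0
        hit = max_range  # reported when nothing is hit within max_range
        while r < max_range:
            col = int(math.floor(x0 + r * dx))
            row = int(math.floor(y0 + r * dy))
            outside = not (0 <= row < height and 0 <= col < width)
            if outside or grid[row, col]:
                hit = r
                break
            r += step
        ranges.append(hit)
    return ranges

# Hypothetical test map: an empty 20x20 room with one-pixel walls on the border.
grid = np.zeros((20, 20), dtype=bool)
grid[0, :] = grid[-1, :] = True
grid[:, 0] = grid[:, -1] = True

# Four beams from the middle of the room.
scan = simulate_scan(grid, pose=(10.0, 10.0, 0.0), n_beams=4)
print(scan)
```

A fixed step is the simplest approach but can skip over walls thinner than `step`. A grid traversal such as Bresenham's line algorithm would avoid that at the cost of a little more code.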

Simulation of the LiDAR and odometry in a sample map

The odometry isn't a perfect simulation, as it simply integrates the commands without accounting for friction or inertia. It is still useful for demonstrations, since it avoids having to operate a real TurtleBot in a room. The LiDAR sensor data can then be visualized in RViz.
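The integration itself can be sketched in a few lines of Python. This is only an illustration of the idea, not the original node: it assumes a planar unicycle model with a simple Euler step, and the function name `integrate_odometry` and the time step `dt` are hypothetical.

```python
import math

def integrate_odometry(pose, v, omega, dt):
    """Advance a planar pose (x, y, theta) by one Euler step.

    v is the commanded linear velocity, omega the commanded angular velocity.
    Friction and inertia are ignored: the robot is assumed to follow the
    command exactly.
    """
    x, y, theta = pose
    x += v * math.cos(theta) * dt
    y += v * math.sin(theta) * dt
    theta += omega * dt
    # Keep the heading wrapped to [-pi, pi].
    theta = math.atan2(math.sin(theta), math.cos(theta))
    return (x, y, theta)

# Drive straight at 0.2 m/s for 10 s, in 0.1 s steps.
pose = (0.0, 0.0, 0.0)
for _ in range(100):
    pose = integrate_odometry(pose, v=0.2, omega=0.0, dt=0.1)
print(pose)
```

In a ROS node, the same update would typically run each time a velocity command is received, with `dt` taken from the time elapsed since the previous command.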

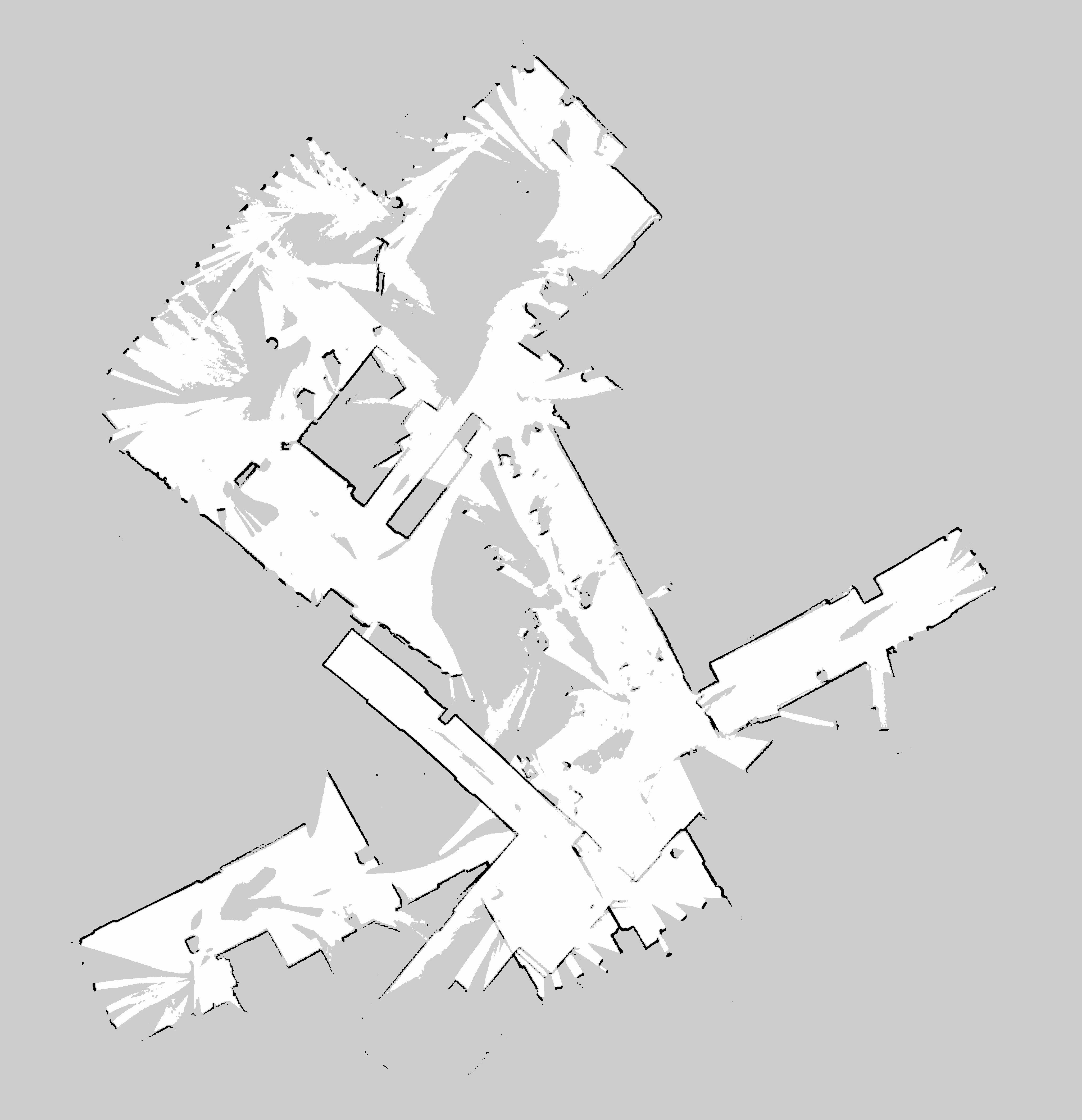

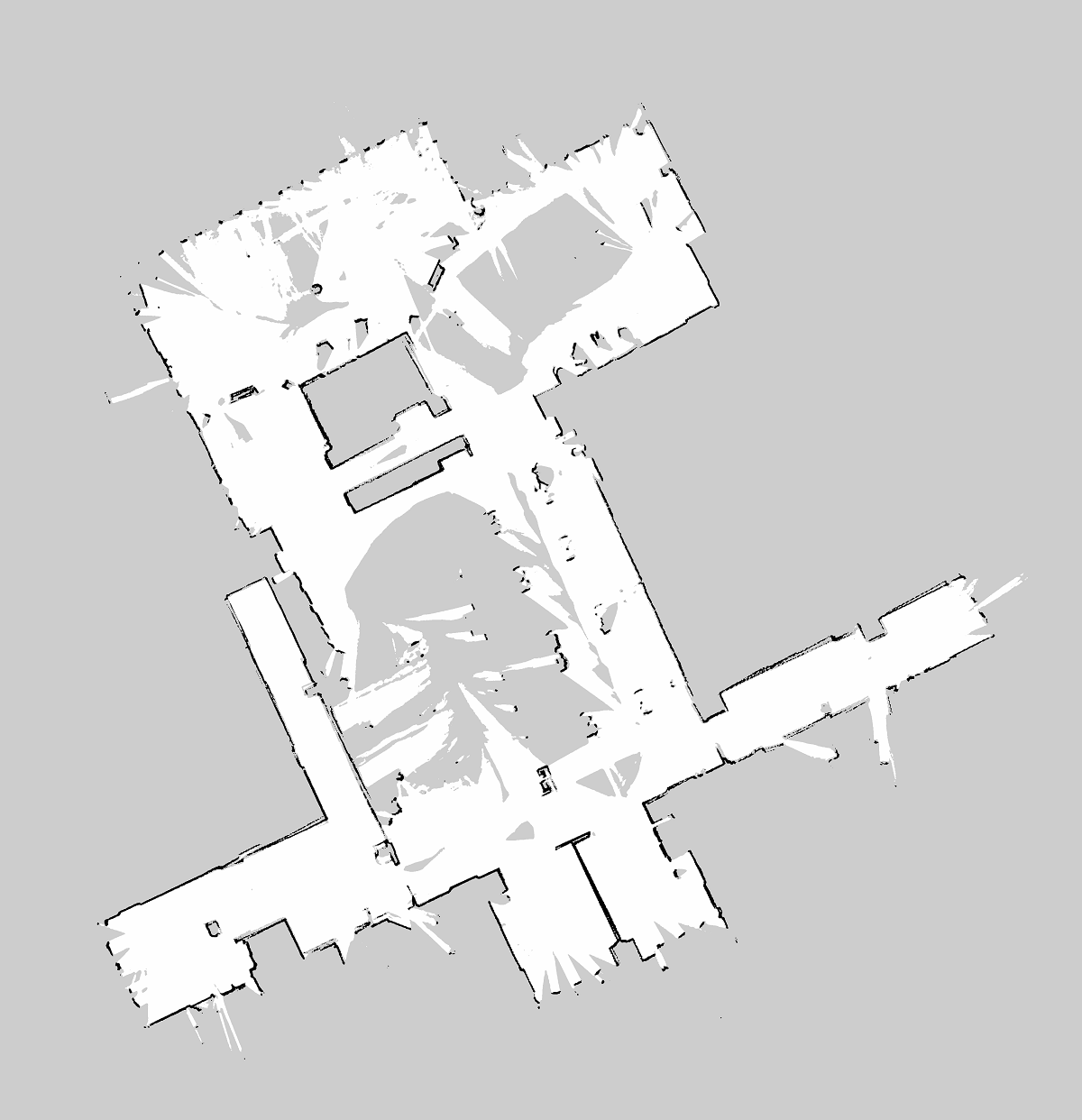

Next, I was tasked with generating new maps using Google Cartographer. I first drove the TurtleBot through two buildings of Centrale Nantes to record bag files containing the onboard odometry, the tf data and the LiDAR data. I then tried using the ROS integration of Cartographer to generate the maps, but the results were appalling: after many iterations, the algorithm collapsed all the walls together into a giant mess. The parameters of the Cartographer algorithm didn't work at all for my setup. I followed the Cartographer tuning guide and documentation, went through all the available parameters, tried a range of values for each, recorded the resulting maps, wrote a small guide to the parameters worth tuning, and finally created a Lua configuration file that produced satisfying results.

Map of the cafeteria from the same bag file, without and with proper tuning

Finally, I experimented with 3D SLAM using a Kinect attached to the TurtleBot. After recording very heavy bag files of the robot driving in a small room, I tried to adapt my Cartographer configuration to create a 3D map. After multiple unsuccessful attempts, I gave up on Cartographer and searched for better-suited software and libraries. I ended up using RTAB-Map in ROS. It worked really well: on the first try, I obtained a rather satisfying 3D point cloud map of the laboratory.